- Introduction to Dimensional Metrology

- Basic Terminologies in Metrology

- Error and Uncertainty

- Accuracy and Precision

- Resolution of Measuring Instruments

- Trueness of measurements

- Repeatability and Reproducibility

- Confidence Levels in measurement results

- Tolerance in manufacturing and inspection

- Validation vs Verification

- Calibration practices

- Accreditation of laboratories and bodies

- Certification processes

- Frequently Asked Questions (FAQs)

Introduction to Dimensional Metrology

NASA's Mars Climate Orbiter (1999) successfully completed 99% of its journey; however, it met with an accident in the Martian atmosphere and was destroyed.

The cause was a seemingly insignificant mistake: the two teams involved in navigation used different units, one working in pound-seconds and the other in newton-seconds. The mistake was small, but its cost was very large. This shows how important it is to measure things, and to measure them correctly.


Similarly, in an engineering company it is vital to measure everything and to make sure things are made right the first time. In mechanical design, the crucial things to measure are the dimensions.

These dimensions represent the design intentions of the design team and indicate the directions and guidelines for the manufacturing and inspection teams as well. The word metrology comes from the Greek ‘metron’, meaning measurement, and ‘logos’, meaning study.

In this article we will discuss the core foundations of dimensional metrology.

Basic Terminologies in Metrology

Error and Uncertainty

In dimensional metrology, error is the difference between the measured value and the true value of the thing being measured. For example, suppose we are measuring the diameter of a rod. The drawing states that it should be 10 ± 0.1 mm, and the calliper reads 9.9 mm. If we take 10 mm as the true value for the sake of illustration, the error is the difference between the measured and true values: 9.9 − 10 = −0.1 mm, an error of 0.1 mm in magnitude.
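The arithmetic in the rod example can be sketched in a few lines of Python. The helper name `measurement_error` is hypothetical, and the nominal 10 mm is treated as the true value purely for illustration; in practice the true value is never known exactly. The result is signed, so a reading below the reference gives a negative error.

```python
def measurement_error(measured_mm: float, reference_mm: float) -> float:
    """Signed error: measured value minus the reference ("true") value."""
    return measured_mm - reference_mm


# Rod example: calliper reads 9.9 mm, reference value taken as 10 mm.
error = measurement_error(measured_mm=9.9, reference_mm=10.0)
print(f"error = {error:+.2f} mm")  # prints "error = -0.10 mm"
```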

Uncertainty is a parameter associated with the result of a measurement that characterizes the dispersion of the values that could reasonably be attributed to the measurand. In the example above, if the reading was taken at 30°C and there was parallax error while taking the reading, there is an uncertainty associated with the measurement. It is usually stated as a band around the measured result, e.g. 9.9 ± 0.01 mm.
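One common way to put a number on uncertainty is to repeat the reading several times and use the scatter of the results. The sketch below assumes a set of made-up calliper readings and computes the standard deviation of the mean. It captures only random scatter; systematic effects such as temperature or parallax would have to be evaluated separately.

```python
import math
import statistics

# Hypothetical repeated calliper readings of the same rod diameter, in mm.
readings_mm = [9.90, 9.91, 9.89, 9.90, 9.92, 9.88]

mean_mm = statistics.mean(readings_mm)
# Sample standard deviation (n - 1 in the denominator).
spread_mm = statistics.stdev(readings_mm)
# Standard uncertainty of the mean, estimated from the repeated readings.
std_uncertainty_mm = spread_mm / math.sqrt(len(readings_mm))

print(f"result = {mean_mm:.3f} mm ± {std_uncertainty_mm:.3f} mm (standard uncertainty)")
```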

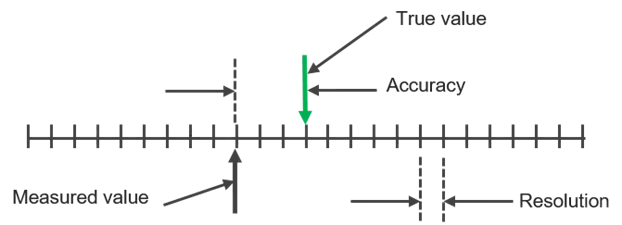

Accuracy and Precision

Accuracy is how close your measurement is to the true value of the quantity being measured. If a weighing scale reads 100.1 g for a true weight of 100 g, the reading is accurate.

Precision indicates the extent to which the results are consistent when repeated measurements are taken while keeping the conditions of the measurements similar.
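The difference between the two ideas is easier to see with numbers. The sketch below uses invented readings from two hypothetical weighing scales: the average offset from the true weight stands in for accuracy, and the sample standard deviation stands in for precision.

```python
import statistics

TRUE_WEIGHT_G = 100.0  # reference value, assumed known for this illustration

# Hypothetical repeated readings from two weighing scales, in grams.
scale_a = [100.1, 99.9, 100.2, 99.8, 100.0]    # centred on the true value, more scatter
scale_b = [100.5, 100.5, 100.6, 100.5, 100.4]  # tightly grouped, but offset


def summarize(readings):
    """Return (mean offset from the true value, sample standard deviation)."""
    offset = statistics.mean(readings) - TRUE_WEIGHT_G
    spread = statistics.stdev(readings)
    return offset, spread


for name, readings in (("A", scale_a), ("B", scale_b)):
    offset, spread = summarize(readings)
    print(f"scale {name}: offset = {offset:+.2f} g, spread = {spread:.2f} g")
```

With these made-up numbers, scale A is the more accurate of the two on average and scale B is the more precise.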

Resolution of Measuring Instruments

The resolution of an instrument is a quantitative expression of the ability of an indicating device to distinguish meaningfully between closely adjacent values of the quantity indicated. Resolution differs between measuring instruments: a micrometer may have a resolution of 0.01 mm, whereas a vernier calliper may have a resolution of 0.02 mm.

It is crucial to know what type of measurement is being taken and what resolution it requires from the instrument. A size smaller than the resolution of the measuring instrument cannot be measured.
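The effect of resolution can be sketched by rounding a size to the nearest step an instrument can indicate. The helper `displayed_reading` is hypothetical and models an otherwise ideal instrument; real instruments add other error sources on top of this.

```python
def displayed_reading(true_size_mm: float, resolution_mm: float) -> float:
    """Round a size to the nearest step the instrument can indicate."""
    steps = round(true_size_mm / resolution_mm)
    # Final rounding only tidies floating-point noise in the product.
    return round(steps * resolution_mm, 6)


# Two rods that differ by 0.004 mm, which is less than either resolution.
rod_1, rod_2 = 10.000, 10.004

for name, res in (("micrometer", 0.01), ("vernier calliper", 0.02)):
    r1 = displayed_reading(rod_1, res)
    r2 = displayed_reading(rod_2, res)
    print(f"{name}: {r1:.2f} mm vs {r2:.2f} mm, distinguishable: {r1 != r2}")
```

Both instruments show the same reading for the two rods, so the 0.004 mm difference goes undetected.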

Trueness of measurements

Trueness is a similar concept to accuracy, but while accuracy refers to the closeness between an individual measurement and the true value, trueness refers to the closeness of agreement between the average value obtained from a set of test results and the true value.

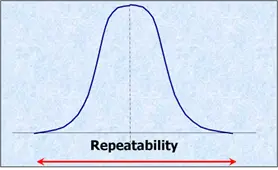

Repeatability and Reproducibility

Repeatability is the closeness of agreement between repeated measurements of the same thing, carried out in the same place, by the same person, on the same equipment, in the same way, at similar times.

Reproducibility is the closeness of agreement between measurements of the same thing carried out in different circumstances, e.g. by a different person, by a different method, or at a different time.
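As a rough illustration, the two quantities can be estimated from readings grouped by operator. The data below are invented, and this is a simplified sketch rather than a formal gauge R&R study: the scatter between operator averages still contains a small contribution from repeatability, which a full analysis would separate out.

```python
import math
import statistics

# Hypothetical readings (mm) of one part: same instrument, three operators.
readings_by_operator = {
    "operator_1": [9.90, 9.91, 9.90, 9.89],
    "operator_2": [9.93, 9.92, 9.94, 9.93],
    "operator_3": [9.88, 9.89, 9.88, 9.87],
}

# Repeatability: scatter within each operator's own readings, pooled
# across operators (equal group sizes, so the variances are averaged).
within_variances = [statistics.variance(r) for r in readings_by_operator.values()]
repeatability_sd = math.sqrt(statistics.mean(within_variances))

# Reproducibility (simplified): scatter between the operators' averages.
operator_means = [statistics.mean(r) for r in readings_by_operator.values()]
between_operator_sd = statistics.stdev(operator_means)

print(f"repeatability sd    = {repeatability_sd:.4f} mm")
print(f"between-operator sd = {between_operator_sd:.4f} mm")
```

In this made-up data set each operator repeats well, but the operators disagree with one another, so the between-operator scatter is the larger of the two.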

Confidence Levels in measurement results

Suppose you make a number of measurements of something, such as the concentration of carbon monoxide gas in your vicinity, and conclude that the true value probably lies between, say, 9 parts per million (ppm) and 11 ppm (or 10 ± 1 ppm). You could not be sure that this was the case, but you could express your confidence that it is. So you might decide that you are 90% confident that the answer lies between those values.
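A confidence statement of this kind can be attached to the average of a set of readings. The sketch below uses invented carbon monoxide readings and assumes a normal approximation for the coverage factor; with only a handful of readings, a Student's t factor would give a somewhat wider interval.

```python
import math
import statistics

# Hypothetical carbon monoxide readings, in ppm.
readings_ppm = [9.6, 10.4, 10.1, 9.8, 10.3, 9.7, 10.2, 9.9]
confidence = 0.90

mean_ppm = statistics.mean(readings_ppm)
std_error = statistics.stdev(readings_ppm) / math.sqrt(len(readings_ppm))

# Two-sided coverage factor from the standard normal distribution.
# Assumption: normal approximation, chosen here for simplicity.
k = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)

half_width = k * std_error
print(f"{mean_ppm:.2f} ± {half_width:.2f} ppm at {confidence:.0%} confidence")
```

Raising the confidence level widens the interval: being more certain that the answer lies inside the band requires a larger band.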

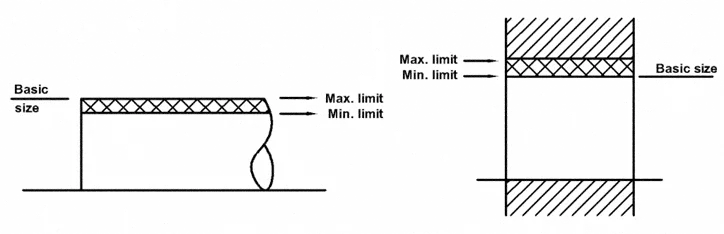

Tolerance in manufacturing and inspection

A tolerance is the maximum acceptable difference between the actual value of some quantity, and the value specified for it.
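In inspection, this definition turns into a simple accept or reject check. The sketch below uses the 10 ± 0.1 mm rod from the error example; the function name `within_tolerance` is hypothetical, and the check deliberately ignores measurement uncertainty, which a real acceptance decision would normally take into account.

```python
def within_tolerance(measured: float, nominal: float, tol: float) -> bool:
    """True if the measured value deviates from nominal by no more than tol."""
    # Small epsilon guards against floating-point rounding at the limits.
    return abs(measured - nominal) <= tol + 1e-9


# Rod specified as 10 ± 0.1 mm; readings are made-up examples.
for reading in (9.9, 10.05, 10.2):
    verdict = "accept" if within_tolerance(reading, 10.0, 0.1) else "reject"
    print(f"{reading:.2f} mm -> {verdict}")
```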

Validation vs Verification

Validation is confirmation that some aspect of a measurement process is fit for purpose. Verification, by contrast, is checking through measurement that a product or process conforms to its specifications.

Calibration practices

Calibration is the comparison of an instrument against a more accurate one (or against a reference signal or condition) to find and correct any errors in its measurement results.

Accreditation of laboratories and bodies

Accreditation is the independent, third-party evaluation of a conformity assessment body (such as certification body, inspection body or laboratory) against recognised standards, conveying formal demonstration of its impartiality and competence to carry out specific conformity assessment tasks (such as certification, inspection and testing).

Certification processes

Certification is the provision by an independent body of written assurance (a certificate) that the product, service or system in question meets specific requirements.